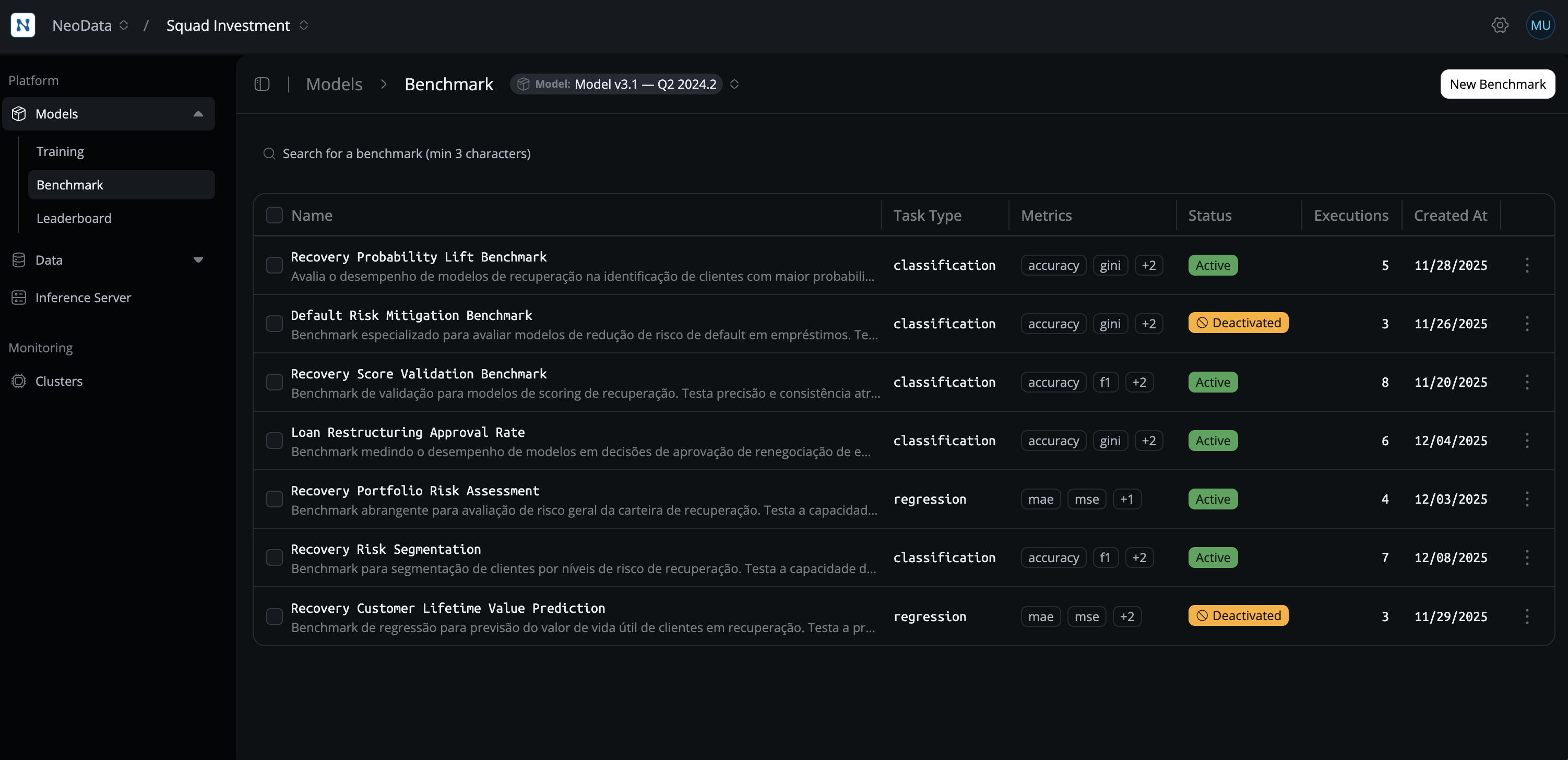

Benchmark Overview

Benchmarks provide standardized evaluation metrics for comparing model performance across training runs and configurations. Use them to evaluate models, compare them against each other, and select the best one.

What are Benchmarks?

Benchmarks in NeoSpace are standardized evaluation configurations that define how models should be evaluated. They specify datasets, metrics, and task types for consistent model comparison.

Key Characteristics:

- Standardized Evaluation: Consistent evaluation across models

- Multiple Metrics: Support for various evaluation metrics

- Task Types: Classification, regression, text generation

- Dataset Consistency: Benchmark datasets stay the same across evaluations, so results remain comparable

- Performance Tracking: Track model performance over time
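To make the idea of an evaluation configuration concrete, here is a minimal sketch of what a benchmark definition could look like as a plain Python object. It is not the NeoSpace schema: the class `BenchmarkConfig`, its field names, the task type identifiers, and the example dataset name are all assumptions made for illustration. It only reflects what this page states, namely that a benchmark specifies a dataset, metrics, and a task type.

```python
from dataclasses import dataclass
from typing import Tuple

# Task types named on this page; the identifier spellings are assumed.
TASK_TYPES = {"classification", "regression", "text_generation"}


@dataclass(frozen=True)
class BenchmarkConfig:
    """Hypothetical stand-in for a benchmark definition (not the NeoSpace schema)."""

    name: str
    task_type: str
    dataset: str              # identifier of the fixed evaluation dataset
    metrics: Tuple[str, ...]  # names of the metrics to compute

    def __post_init__(self) -> None:
        # Reject configurations that could not produce a comparable result.
        if self.task_type not in TASK_TYPES:
            raise ValueError(f"unknown task type: {self.task_type!r}")
        if not self.metrics:
            raise ValueError("a benchmark needs at least one metric")


# Illustrative values only; the dataset name is made up.
churn = BenchmarkConfig(
    name="churn-v1",
    task_type="classification",
    dataset="churn_holdout",
    metrics=("accuracy", "f1"),
)
```

Making the object immutable (`frozen=True`) mirrors the consistency goal above: once defined, the same dataset and metrics apply to every model evaluated against it.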

Why Use Benchmarks?

Benchmarks are essential for:

- Model Comparison: Compare models fairly and consistently

- Performance Evaluation: Evaluate model performance objectively

- Model Selection: Select the best-performing models

- Reproducibility: Ensure reproducible evaluation results

- Tracking Progress: Track performance improvements over time
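Comparison and selection can be sketched in a few lines once every model has been scored on the same benchmark. The helper `select_best`, the shape of the `results` dictionary, and the scores themselves are hypothetical and exist only for this example; they are not a NeoSpace API or real measurements.

```python
from typing import Dict


def select_best(
    results: Dict[str, Dict[str, float]],
    metric: str,
    higher_is_better: bool = True,
) -> str:
    """Return the name of the model with the best value for `metric`."""
    # Keep only the models that actually report the requested metric.
    scored = {model: scores[metric] for model, scores in results.items() if metric in scores}
    if not scored:
        raise ValueError(f"no model reports metric {metric!r}")
    pick = max if higher_is_better else min
    return pick(scored, key=scored.get)


# Made-up scores for two models evaluated on the same benchmark.
results = {
    "model-a": {"accuracy": 0.91, "f1": 0.88},
    "model-b": {"accuracy": 0.93, "f1": 0.86},
}

best_by_accuracy = select_best(results, "accuracy")
best_by_f1 = select_best(results, "f1")
```

The two calls pick different models here, which is why a benchmark fixes its metrics up front: the choice of metric decides which model counts as best. For error-style metrics where lower values are better, pass `higher_is_better=False`.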

Use Cases

Benchmarks are used for:

- Model Evaluation: Evaluate trained models

- Model Comparison: Compare different model versions

- A/B Testing: Compare different model architectures

- Performance Monitoring: Monitor model performance over time

- Research: Conduct model research and experiments

Next Steps

- Learn about Core Concepts to understand benchmarks

- Explore Metrics to understand available metrics

- See Creation and Management to create and manage benchmarks